Google recently announced Project Tango, a 3D mapping framework that allows simple scanning and virtual reconstruction of a real-world 3D environment. The project joins a growing category of 3D sensing applications built on sensors such as Microsoft’s Kinect and those from PrimeSense, Pelican Imaging, SoftKinetic, and PMD, which have emerged in recent years.

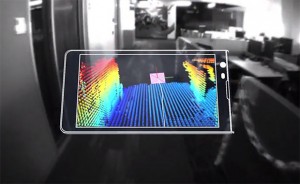

Project Tango includes an SDK and a phone-like sensing device that is similar to Kinect in functionality, although it appears to be missing a depth sensor and texture projector. The device's sensors allow it to make over a quarter million 3D measurements every second, updating its position and orientation in real time and combining that data into a single 3D model of the space around the user.
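To make the idea of "combining that data into a single 3D model" more concrete, here is a minimal sketch in plain Python. It is not the Tango SDK: the function names (`yaw_rotation`, `to_world`, `fuse`), the frame layout, and the 5 cm voxel size are all assumptions made for illustration. It shows the general technique of using each frame's estimated pose to move points from the device frame into a shared world frame, then merging points that land in the same small cell.

```python
import math

def yaw_rotation(theta):
    """3x3 rotation matrix for a turn of theta radians about the vertical (z) axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def to_world(point, rotation, translation):
    """Map a point from the device frame into the world frame: R * p + t."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )

def fuse(frames, voxel=0.05):
    """Merge per-frame points into one model, keeping one point per voxel cell.

    Each frame is a hypothetical (rotation, translation, points) tuple;
    the voxel size (in metres) is an arbitrary choice for this sketch.
    """
    model = {}
    for rotation, translation, points in frames:
        for p in points:
            w = to_world(p, rotation, translation)
            key = tuple(math.floor(c / voxel) for c in w)
            model.setdefault(key, w)  # first point seen in a cell wins
    return list(model.values())

# Two frames that both observe the same spot from different poses;
# the second frame also sees one additional point.
frames = [
    (yaw_rotation(0.0), (0.0, 0.0, 0.0), [(1.0, 0.0, 0.0)]),
    (yaw_rotation(math.pi / 2), (1.0, -1.0, 0.0), [(1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]),
]
model = fuse(frames)
print(len(model), "points in the fused model")
```

A real pipeline would also have to estimate the pose itself and cope with sensor noise; this sketch assumes the pose is already known for every frame.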

This framework will be beneficial for creating augmented reality experiences, and I assume there will be close integration with Google Glass. I can see it being used wherever a 3D point map of a real space is needed, such as drone navigation in warehouses or augmented reality scenarios. Imagine creating a 3D point cloud of a factory floor and attaching scenarios to it, such as a fire or a falling piece of equipment, that play out when the user physically approaches the trigger area. Such a scenario would be great for training. Another example would be scanning a museum space and loading audio/visual material that supplements the existing exhibits. This supplementary material would be visible with the aid of Google Glass or a similar AR interface. A Tango device could also be useful for 3D model generation, a topic we previously covered here, and here.
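The training scenario above, where a simulated event plays out as the user approaches a trigger area, comes down to a proximity check against the device's tracked position. The sketch below is one simple way to express that; `TriggerZone`, `active_zones`, and the spherical zone shape are hypothetical names and choices for illustration, not part of any Tango API.

```python
import math
from dataclasses import dataclass

@dataclass
class TriggerZone:
    """A hypothetical spherical region in the scanned space that starts a scenario."""
    name: str
    center: tuple   # (x, y, z) in metres, in the same frame as the tracked position
    radius: float   # metres

def active_zones(position, zones):
    """Return the names of all zones the tracked position currently falls inside."""
    return [z.name for z in zones if math.dist(position, z.center) <= z.radius]

# Example zones on an imagined factory floor.
zones = [
    TriggerZone("fire drill", (0.0, 0.0, 0.0), 2.0),
    TriggerZone("falling equipment", (10.0, 0.0, 0.0), 1.0),
]
print(active_zones((1.0, 0.5, 0.0), zones))
```

An application would call `active_zones` each time the device reports a new position and start the matching scenario when a zone first becomes active.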

Tango offers a glimpse of things to come in the near future. Google is doing a great thing here by developing a framework that will help future generations of artists, scientists, hobbyists, and other visionaries create new ways of interacting with our environment.